It's no news how OpenAI, Deepseek, Claude and other Large Language Models (LLMs) are disrupting the world and have significantly transformed the landscape of engineering workflows, opening the door to more innovative solutions and exciting use cases. In 2023, Snowflake announced a partnership with Microsoft Azure Open AI, the leader in Generative AI and LLMs, to bring generative AI Models and more sophisticated machine learning capabilities to the Data Cloud, which unlocks more exciting data applications.

At Data Culture, we specialize in empowering our clients to harness the full potential of their analytics capabilities to achieve their business objectives. In this article, we delve into a specific case of using AI for good where we helped a Recovery Intelligence company leverage ChatGPT to generate plans and goals for individuals based on assessments taken using dbt to interact with the data in Snowflake using OpenAI's powerful language models. While the use case can vary based on the prompts inputed into ChatGPT, the approach discussed in this article can be adapted across different domains.

This story is about a public benefit company we worked with, focused on addiction recovery, resilience, and mental health.

They leverage a secure platform to administer different standard assessments on topics such as the Patient Health Questionnaire (PHQ), Brief Addiction Monitor (BAM), Brief Inventory of Thriving (BIT), Professional Quality of Life Scale, and other assessments. These assessments follow standards expected by clinicians and other expert care professionals to collect quality data.

Beyond these standard measures, this company developed their own index, a proprietary, multidimensional assessment that is leveraged for comprehensive data capture and collection, which measures all situations, both internal and external, that can affect the entire recovery process of an individual.

The collected data is ingested into Snowflake Datawarehouse using Fivetran, where it is transformed with dbt and integrated with OpenAI for further response generation.

Let's dive into the process and how we ultimately implemented the solution.

Before interacting with ChatGPT and integrating it with your dbt models in Snowflake, you need to set up your Azure OpenAI API and generate your API key.

You can follow the steps :

💡 Base URL sample - https://company_name.openai.azure.com

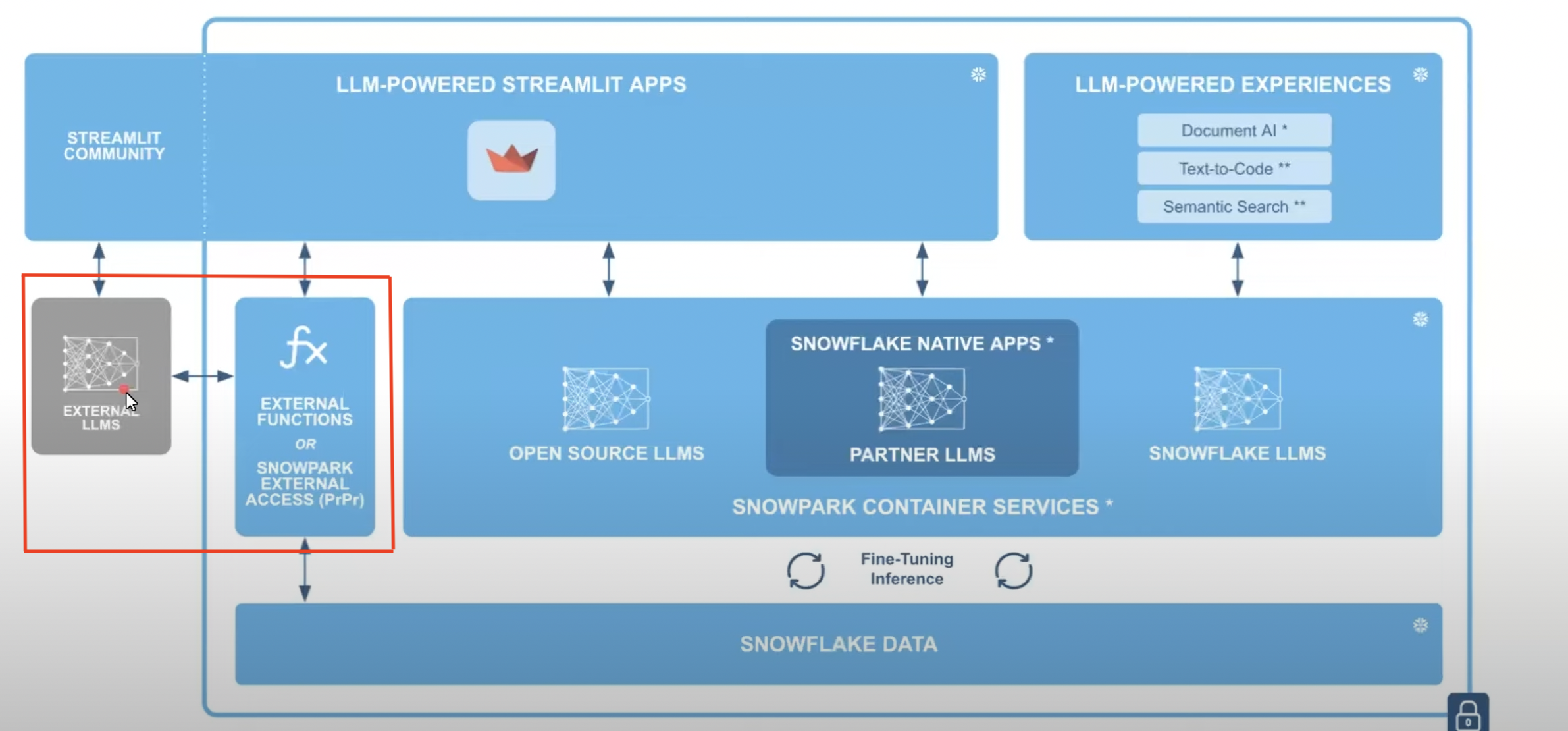

With the launch of External Network Access feature in Snowflake, it is now public, a feature that allows you to create a secure connection to any network external to Snowflake and can be accessed within the User Defined Function (UDF) or Stored Procedure, you can successfully integrate dbt workflow to access OpenAI.

How does Snowflake do this?

This article will leverage the External Function using a UDF created with the required permissions to access the OpenAI resource. As a best practice, we create a new role and grant the necessary privileges to that role to perform the operations needed.

The procedures for setting up the Snowflake integration are as follows:

Step 1: Create a new ROLE and grant usage and access to the necessary databases and schemas in Snowflake to that role. Given that we are using dbt to connect to Snowflake and as best practice, the transformer role is already provisioned for dbt.

CREATE SCHEMA IF NOT EXISTS openai_schema;

GRANT USAGE ON SCHEMA openai_schema TO ROLE TRANSFORMER;

CREATE ROLE if not exists TRANSFORMER;

Step 2: Create a Network rule. A Network rule is a feature in Snowflake that allows you to define which website you allow your user with the role to connect from the Snowflake account.

Recall during the Open AI account setup, a unique base URL was generated; this URL will be used to create the network URL to allow Snowflake to connect to the OpenAI services.

CREATE OR REPLACE NETWORK RULE OPENAI_NETWORK_RULE

MODE = EGRESS

TYPE = HOST_PORT

VALUE_LIST = ('company.openai.azure.com');

Step 3: Create a Secret. The secret is required to safely store the OpenAI key generated during the signup in Snowflake.

-- Create secret with OpenAI Key

CREATE OR REPLACE SECRET OPENAI_API_KEY

TYPE = GENERIC_STRING

SECRET_STRING = '<openai-key>'

Step 4: Create an External Access Integration. This integration allows you to access the outside website from the Snowflake account, and this integration abides by the rule created and defined to access the website. The communication line is secured, and all happens within Snowflake to the URL in an SSL mode. The key that will be used for the integration is the Open AI key generated during the Sign-up

CREATE OR REPLACE EXTERNAL ACCESS INTEGRATION OPEN_AI_INT

ALLOWED_NETWORK_RULES = (OPENAI_NETWORK_RULE)

ALLOWED_AUTHENTICATION_SECRETS = (OPENAI_API_KEY)

ENABLED=TRUE;

Make sure to grant usage on the integration to SYSADMIN so that it can be accessed when the function is created.

Step 5 : Create a Python User Defined Function using the OpenAI package. You do not need to install anything because OpenAI is automatically available as a part of the Snowflake channel in Anaconda. The input parameters passed into the function will allow you to define and control how to interact and control the prompt and the engine ChatGPT uses. The input parameters are where the use case application can vary.

USE ROLE sysadmin;

CREATE OR REPLACE FUNCTION query_chatgpt_prompt(prompt varchar, data varchar)

RETURNS STRING

LANGUAGE PYTHON

RUNTIME_VERSION = '3.8'

PACKAGES = ('requests','openai')

HANDLER = 'get_chat_response'

EXTERNAL_ACCESS_INTEGRATIONS = (OPEN_AI_INT)

PACKAGES = ('openai')

SECRETS = ('cred' = OPENAI_API_KEY )

AS

$$

import _snowflake

import openai

import requests

openai.api_type = "azure"

openai.api_base = "<https://company.openai.azure.com/>"

openai.api_key = _snowflake.get_generic_secret_string(''cred'')

import _snowflake

import openai

def prompt(prompt, engine, max_tokens):

username_password_object = _snowflake.get_username_password('cred');

openai.api_key = username_password_object.password

response = openai.Completion.create(

engine=engine,

prompt=prompt,

max_tokens=max_tokens

)

return response.choices[0].text.strip()

$$;

The Python script defined is a standard Python code. With the Python UDF created, the function can be accessed from any Query through SQL or external tools using dbt. You only need to call the UDF using the function name and pass the required input parameters defined, and you can access ChatGPT with any prompt defined in your parameter.

With Steps 1 & 2 completed, you can leverage Snowflake's output for any downstream applications. To fully automate and orchestrate the entire process and further data transformation of the response generated from ChatGPT, we leverage dbt to automate that workflow.

This tutorial assumes the reader has previous experience using dbt. If this is your first time reading about dbt, it's an open-source data tool that helps analysts and engineers seamlessly manage their data transformation workflow in the warehouse. You can check out the official docs on getting started and the tool's different capabilities. For this project, we leveraged different advanced features of dbt, like incremental materialization, Macros, and Hooks, to orchestrate different aspects of the task.

We leveraged Ralph Kimball's Dimension modelling technique to transform the ingested data in Snowflake into different data assets ready for reporting and downstream analysis. One of our client's needs was to generate recommended goals and plans for each participant who took an assessment based on their result and the defined scale their output result falls into - see ProQOL as an example.

To achieve this while maximizing resources for API calls, we created an incremental data model that transforms all daily assessments and feeds the output to the UDF Function that calls the OpenAI API to generate results for each input parameter based on the prompt configured. It is important to note AI adoption in any use case can only be fully maximized with well-transformed quality data. All the transformation required to prepare the data into the needed format as input parameter to the UDF function was done with dbt.

All the permission provisioning and workflow required to interact with OpenAI successfully defined in Step (2) above is orchestrated automatically by bundling all the queries into dbt Macros and calling in an on-run-start hook as shown below:

Creating UDF Function Macro

{% macro create_plan() %}

CREATE OR REPLACE FUNCTION query_chatgpt_prompt(prompt varchar, data varchar)

RETURNS STRING

LANGUAGE PYTHON

RUNTIME_VERSION = '3.8'

PACKAGES = ('requests','openai')

HANDLER = 'get_chat_response'

EXTERNAL_ACCESS_INTEGRATIONS = (OPEN_AI_INT)

PACKAGES = ('openai')

SECRETS = ('cred' = OPENAI_API_KEY )

AS

$$

import _snowflake

import openai

import requests

openai.api_type = "azure"

openai.api_base = "<https://company.openai.azure.com/>"

openai.api_key = _snowflake.get_generic_secret_string(''cred'')

import _snowflake

import openai

def prompt(prompt, engine, max_tokens):

username_password_object = _snowflake.get_username_password('cred');

openai.api_key = username_password_object.password

response = openai.Completion.create(

engine=engine,

prompt=prompt,

max_tokens=max_tokens

)

return response.choices[0].text.strip();

{% endmacro %}

Granting Permission, Privileges and Calling UDF Function

{% macro udfs() %}

CREATE SCHEMA IF NOT EXISTS openai_schema;

GRANT USAGE ON SCHEMA openai_schema TO ROLE TRANSFORMER;

CREATE ROLE if not exists TRANSFORMER;

-- Create secret with OpenAI Key

CREATE OR REPLACE SECRET OPENAI_API_KEY

TYPE = GENERIC_STRING

SECRET_STRING = '<openai-key>'

GRANT USAGE ON SECRET OPENAI_API_KEY TO ROLE TRANSFORMER;

GRANT READ ON SECRET OPENAI_API_KEY TO ROLE TRANSFORMER;

CREATE OR REPLACE NETWORK RULE OPENAI_NETWORK_RULE

MODE = EGRESS

TYPE = HOST_PORT

VALUE_LIST = ('company.openai.azure.com');

CREATE OR REPLACE EXTERNAL ACCESS INTEGRATION OPEN_AI_INT

ALLOWED_NETWORK_RULES = (OPENAI_NETWORK_RULE)

ALLOWED_AUTHENTICATION_SECRETS = (OPENAI_API_KEY)

ENABLED=TRUE;

GRANT USAGE ON INTEGRATION OPEN_AI_INT TO ROLE TRANSFORMER;

USE ROLE TRANSFORMER;

{{create_plan()}}

{% endmacro %}

The macro udfs() is then added to dbt_project.yml as a hook and the UDF function can be accessed anywhere within the dbt models as seen below:

with json_input AS (

select field

from data

)

select {{target.schema}}.query_chatgpt_prompt(

/*PROMPT*/, json_input.field)

from json_input

The returned response from the UDF function is a JSON object containing each participant's recommended goals and plans. The JSON object is denormalized and transformed into clean Plan and Goals tables using dbt for different reporting and analytics purposes

The returned response from the UDF function is a JSON object containing each participant's recommended goals and plans. The JSON object is denormalized and transformed into clean Plan and Goals tables using dbt for reporting purposes.

And just like that, you have ChatGPT plugged into dbt.

We hope this tutorial helps you adapt the approach to your specific use case and continue leveraging AI to solve interesting problems.