A step-by-step methodology for building data models that actually solve business problems

One of the biggest challenges in data modelling isn't technical; it's methodological. While there is extensive documentation on how to build star schemas, optimise performance, and write efficient transformations, there is surprisingly little guidance on a systematic approach to data modelling projects.

Many data professionals learn modelling techniques in isolation: dimensional modelling principles, normalisation rules, and performance optimisation strategies. However, when it comes to approaching a new project from start to finish, or trying to reverse-engineer a poorly built data model into more scalable data assets that solve business needs, they often find themselves jumping between different phases without a clear framework.

The result? Projects that feel reactive rather than strategic, models that solve technical problems but miss business needs, and teams that spend more time rebuilding than building.

At Data Culture, we’ve had the opportunity to build data models across various industries and teams. The goal of this article is to share the systematic approach we use to navigate from initial business requirements to production-ready models. This framework provides structure while remaining flexible enough to adapt to different contexts and constraints. It also synthesises ideas and lessons from Agile Data Warehouse Design and The Data Warehouse Toolkit, which we’ve adopted across all our projects.

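Before diving into the details, here's a high-level overview of our systematic data modelling approach, which moves through three phases: Conceptual Data Modelling (CDM), Logical Data Modelling (LDM), and Physical Data Modelling (PDM).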

Each phase builds upon the previous one, with continuous stakeholder collaboration and iterative feedback loops throughout the entire process.

Traditional data modelling often follows a Big-Design-Up-Front (BDUF) or waterfall methodology, where extensive planning and documentation occur before any implementation begins. While this approach works in some contexts, it can be suboptimal in today's fast-paced business environment, where requirements evolve rapidly and stakeholders need to see value quickly.

The challenge with BDUF in data modelling:

A more effective approach combines:

Just-Enough-Design-Up-Front (JEDUF): Doing sufficient planning to understand the problem space and general direction without over-engineering solutions before understanding real usage patterns.

Just-In-Time (JIT) design: Making detailed technical decisions when you have enough information to make them well, rather than guessing early in the process.

Collaborative and iterative methodology: Involving stakeholders throughout the process rather than treating them as requirements providers at the beginning and end users at the end.

This agile approach to data modelling emphasises rapid feedback loops, stakeholder collaboration, and iterative development that delivers value incrementally while building toward a comprehensive solution.

This framework incorporates these agile principles into three distinct but overlapping phases. Each phase involves collaboration between data modellers and stakeholders, with multiple opportunities for feedback and iteration.

Why This Stage Is Critical:

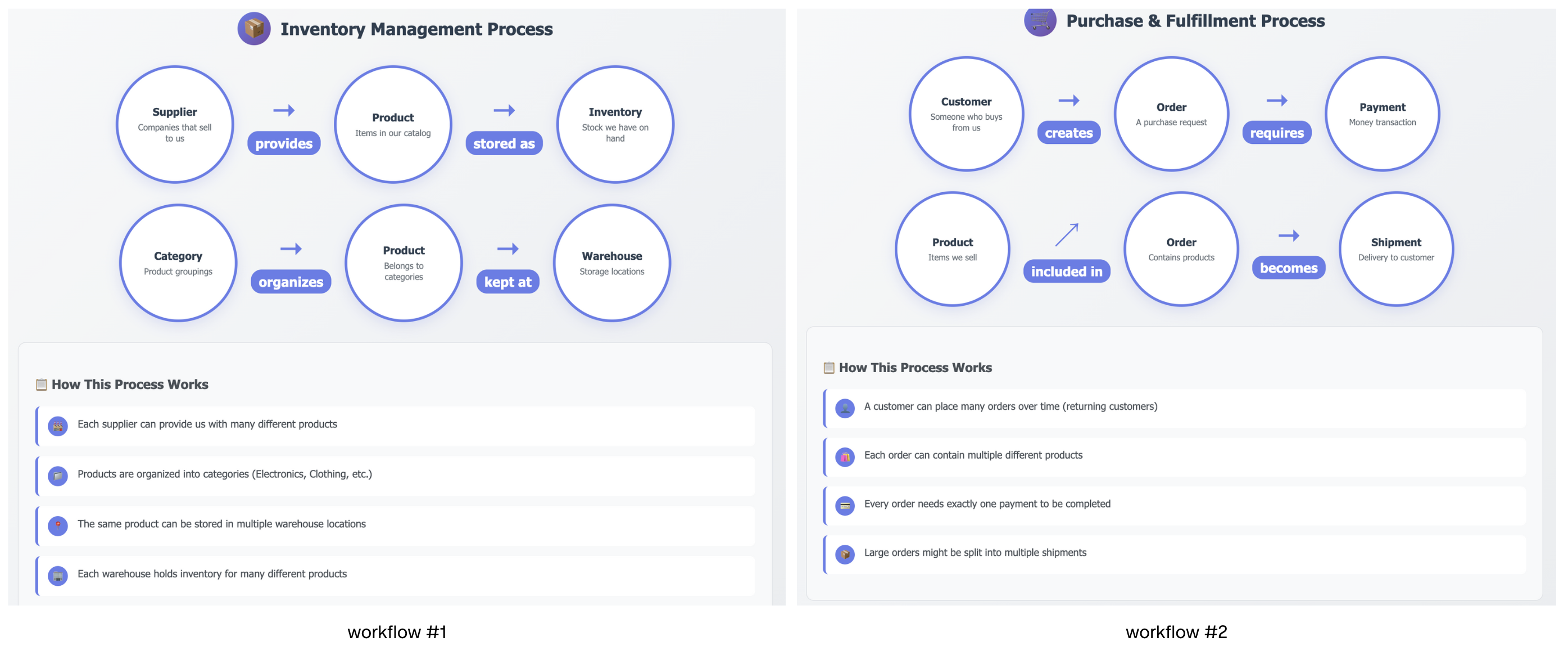

The Conceptual Data Modelling (CDM) phase fosters a two-way dialogue: data modellers gain deep insight into stakeholder needs, and stakeholders get to share their wishlists and reporting requirements. This collaboration is essential because many of the stakeholders involved in these discussions will be the actual consumers and users of the final analytics reports.

CDM operates at the big-picture level of data modelling, where you focus on how different entities and objects connect as a workflow rather than getting bogged down in technical details. Think of it as mapping a customer journey through a business process. Success at this stage depends on your ability to listen to the business process owners who interact daily with the systems that generate the data behind the scenes, and to ask the questions that give you more context on each workflow.

A data model is only as valuable as its connection to business needs and its ability to make a meaningful impact. Achieving that impact depends largely on a data modeller's ability to understand the business process workflow, the language used, the entities involved, the core concepts, and the specific nuances that must be captured downstream in the logical and physical design phases.
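To make "entities connected as a workflow" more concrete, here is a minimal Python sketch of a conceptual model captured as plain business-language relationships. The entities and verbs (Customer, Order, Product, Shipment) are hypothetical examples, not part of the framework itself; the point is that nothing in it mentions tables, keys, or data types, so stakeholders can read it back and correct it.

```python
# A conceptual model kept in business language: entities plus the verbs
# that connect them. No tables, keys, or data types at this stage.
# The entities and verbs below are hypothetical examples.
RELATIONSHIPS = [
    ("Customer", "places", "Order"),
    ("Order", "contains", "Product"),
    ("Order", "is fulfilled by", "Shipment"),
    ("Shipment", "is delivered to", "Customer"),
]


def describe_workflow(relationships):
    """Render each relationship as a sentence stakeholders can validate."""
    return [f"{subject} {verb} {obj}" for subject, verb, obj in relationships]


def list_entities(relationships):
    """Collect every distinct entity named anywhere in the workflow."""
    found = set()
    for subject, _verb, obj in relationships:
        found.update((subject, obj))
    return sorted(found)


if __name__ == "__main__":
    for sentence in describe_workflow(RELATIONSHIPS):
        print(sentence)
    print("Entities:", ", ".join(list_entities(RELATIONSHIPS)))
```

Reading the generated sentences aloud in a discovery session is one quick way to catch a wrong or missing relationship before any design work starts.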

Avoiding Common Pitfalls:

Many data modellers begin by worrying about implementation specifics without first understanding the language used by the business. They jump into technical details without gaining clarity on the big picture. CDM provides an opportunity to include non-technical downstream users in the data model design phase, empowering them to understand the data model workflow when it's communicated to them.

The CDM Process:

Step 1: Collaborative Discovery Sessions

Example Questions to Ask:

Step 2: Entity Workflow Journey Mapping

Step 3: Iterative Use Case Validation

CDM Deliverable: A collaborative visual business process map with clearly defined entities, relationships, and prioritised use cases that stakeholders helped create and genuinely support because they participated in the design process.

Below is a fictitious sample use case

Red Flags in CDM Phase:

- Talking about tables or technical implementation too early

- Assuming you understand the business domain without collaborative validation

- Rushing through this phase to "get to the real work" (this IS part of the real work)

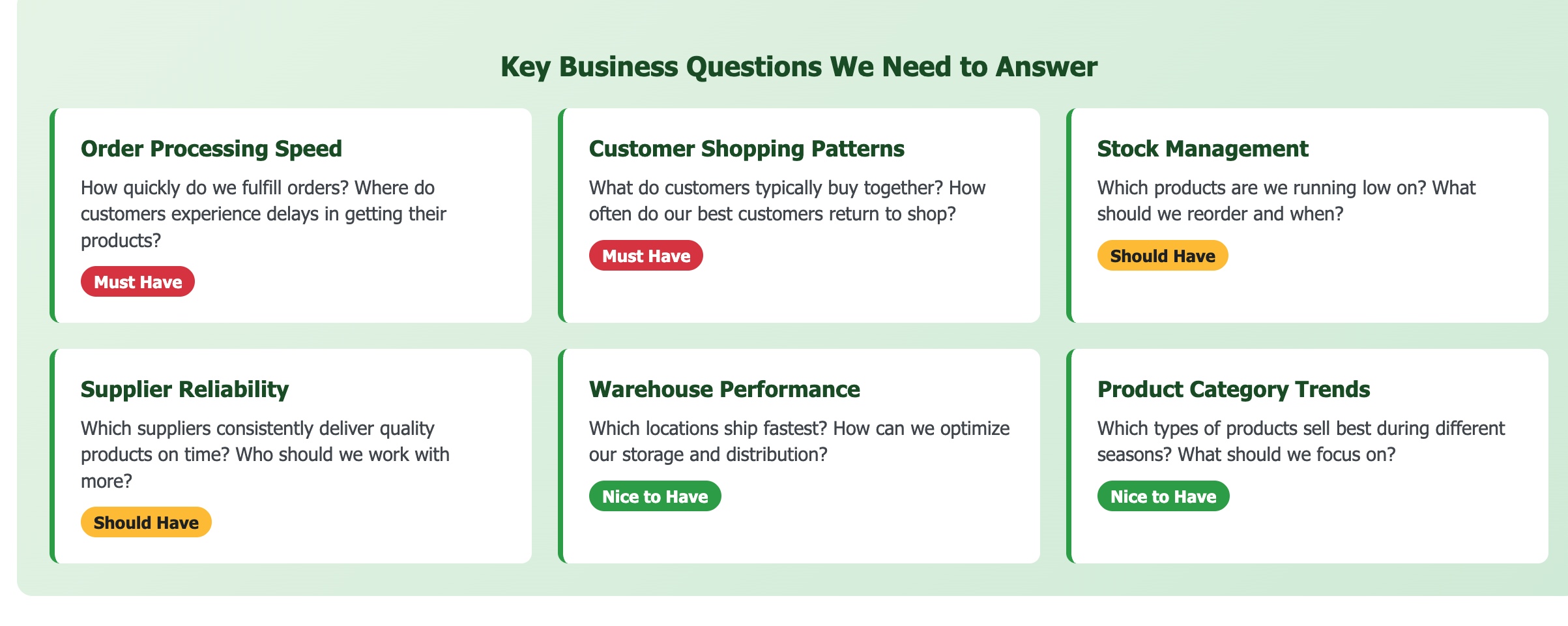

Goal: Translate business requirements into a data structure design while maintaining collaborative alignment with stakeholders.

Once the CDM phase is complete, you have a solid understanding of the various components and requirements that must be met. However, before diving into the specifics of actual logical or physical data model implementation, there's a critical intermediate step that bridges business requirements and technical design.

The Wireframe Validation Process:

At Data Culture, we approach data modelling with our clients as strategic thought partners, and I strongly advocate that analytics engineers build this mindset into their own modelling approach. After gathering all business requirements and developing a clear overview of the conceptual data model workflow, analytics engineers collaborate with data strategists to create mockups and wireframes that incorporate every use case and insight surfaced during the initial discovery stage. These wireframe dashboard designs are then reviewed against an initial understanding of the available data sources to gauge how ready the data is to meet each requirement.

This step is crucial for avoiding overcommitment and setting realistic expectations based on the current state of the data. Once business stakeholders have reviewed the wireframes and given feedback on usability and experience, this collaborative alignment offers clear direction for both logical and physical data modelling. In the absence of a Data Strategist, an Analytics Engineer can work with a Data Analyst to create the mockup. If you are a data team of one, owning this workflow yourself, even with a simple mockup in Miro, Figma, or any other visual tool, goes a long way toward a collaborative data model design that makes an impact.
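The data-readiness review can be reduced to a simple comparison: the fields each wireframe tile needs versus the fields the current sources actually provide. The sketch below assumes a hypothetical wireframe and field inventory; in practice the inventory would come from profiling your real sources.

```python
# Hypothetical wireframe: each dashboard tile lists the fields it needs.
WIREFRAME = {
    "Monthly revenue trend": {"order_date", "order_amount"},
    "Revenue by region": {"order_amount", "customer_region"},
    "Customer lifetime value": {"customer_id", "order_amount", "acquisition_channel"},
}

# Hypothetical inventory of fields found in the current source systems.
AVAILABLE_FIELDS = {"order_id", "order_date", "order_amount", "customer_id", "customer_region"}


def readiness_report(wireframe, available):
    """Map each tile to the set of required fields that no source provides yet."""
    return {tile: needed - available for tile, needed in wireframe.items()}


if __name__ == "__main__":
    for tile, missing in readiness_report(WIREFRAME, AVAILABLE_FIELDS).items():
        status = "ready" if not missing else "missing " + ", ".join(sorted(missing))
        print(f"{tile}: {status}")
```

A tile with missing fields is exactly the kind of item to raise with stakeholders before committing to it.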

At the Logical Data Model stage, you're not concerned with table optimisation or materialisation strategies. Instead, you go one level deeper than the conceptual model to think through which fields (attributes) will be available in which tables (entities), and determine the most appropriate modelling approach, whether that's a star schema for analytics use cases or any other approach that fits the purpose. No single modelling approach is a universal solution.
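A logical model can be written down without committing to any platform. The sketch below, assuming a hypothetical order-line star schema, records each entity's attributes, the grain of the fact entity, and which attributes reference which dimensions, then checks that every reference resolves. Types and materialisation are deliberately absent; those belong to the physical phase.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    """A logical entity: a name, its attributes, and references to other entities."""
    name: str
    attributes: list[str]
    grain: str = ""  # what one row represents (most important for fact entities)
    references: dict[str, str] = field(default_factory=dict)  # attribute -> entity name


# Hypothetical star schema: one fact entity surrounded by dimensions.
STAR_SCHEMA = [
    Entity("dim_customer", ["customer_key", "customer_name", "region"]),
    Entity("dim_product", ["product_key", "product_name", "category"]),
    Entity("dim_date", ["date_key", "calendar_date", "month", "year"]),
    Entity(
        "fct_order_line",
        ["order_line_id", "date_key", "customer_key", "product_key", "quantity", "line_amount"],
        grain="one row per order line",
        references={
            "date_key": "dim_date",
            "customer_key": "dim_customer",
            "product_key": "dim_product",
        },
    ),
]


def validate_references(model):
    """Return a list of problems where a reference does not resolve."""
    by_name = {entity.name: entity for entity in model}
    problems = []
    for entity in model:
        for attribute, target in entity.references.items():
            if attribute not in entity.attributes:
                problems.append(f"{entity.name}.{attribute} is not a declared attribute")
            elif target not in by_name:
                problems.append(f"{entity.name}.{attribute} references unknown entity {target}")
            elif attribute not in by_name[target].attributes:
                problems.append(f"{target} has no attribute {attribute}")
    return problems


if __name__ == "__main__":
    print(validate_references(STAR_SCHEMA) or "All references resolve")
```

Stating the grain explicitly ("one row per order line") is the design choice that most constrains everything else in a star schema, so it is worth recording alongside the attributes.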

The Logical Data Modelling stage requires significant thinking and brainstorming to connect data sources to business needs. This stage reinforces the idea of "Data Modelling as an Art." While there are established rules and standards for building and optimising physical tables (which can be considered a science), the logical aspect of data modelling is often opinion-driven and heavily influenced by the nuances of different business operations. This is where analytics engineers demonstrate their core value: the ability to translate specific, nuanced, complex, and constantly changing business processes into scalable data models that provide genuine business value. This human insight and adaptability to workflow complexity make this aspect of data modelling particularly difficult to automate or replace with AI.

The goal of a Logical Data Model is to build a solution that solves problems. This is why this phase must stem from a solid understanding and alignment with business stakeholders, helping to reduce the risk of over-engineering or trying to capture every possible future requirement in the initial design.

Step 1: Requirements Translation

Step 2: Modelling Approach Selection

Step 3: Business Logic Definition

Step 4: Wireframe Validation
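Business logic definition (Step 3) is where terms like "active customer" get a single agreed meaning. The minimal sketch below assumes a hypothetical 90-day rule; the point is to write the definition down once, explicitly, including its edge cases (here, customers who never ordered), so every downstream model applies the same rule.

```python
from datetime import date, timedelta
from typing import Optional

# Hypothetical rule agreed with stakeholders: a customer is "active" when
# their most recent order is no more than 90 days before the reporting date.
ACTIVE_WINDOW_DAYS = 90


def is_active_customer(last_order_date: Optional[date], as_of: date) -> bool:
    """Apply the agreed 'active customer' definition as of a given date."""
    if last_order_date is None:
        return False  # customers who never ordered are not active
    return as_of - last_order_date <= timedelta(days=ACTIVE_WINDOW_DAYS)


if __name__ == "__main__":
    print(is_active_customer(date(2024, 1, 1), as_of=date(2024, 3, 31)))
    print(is_active_customer(date(2024, 1, 1), as_of=date(2024, 4, 1)))
```

Boundaries like "exactly 90 days" are worth confirming with stakeholders, since inclusive versus exclusive windows change the numbers on the dashboard.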

LDM Deliverable: A complete logical data model with defined entities, attributes, relationships, and business rules that map directly to validated business use cases.

Common LDM Mistakes:

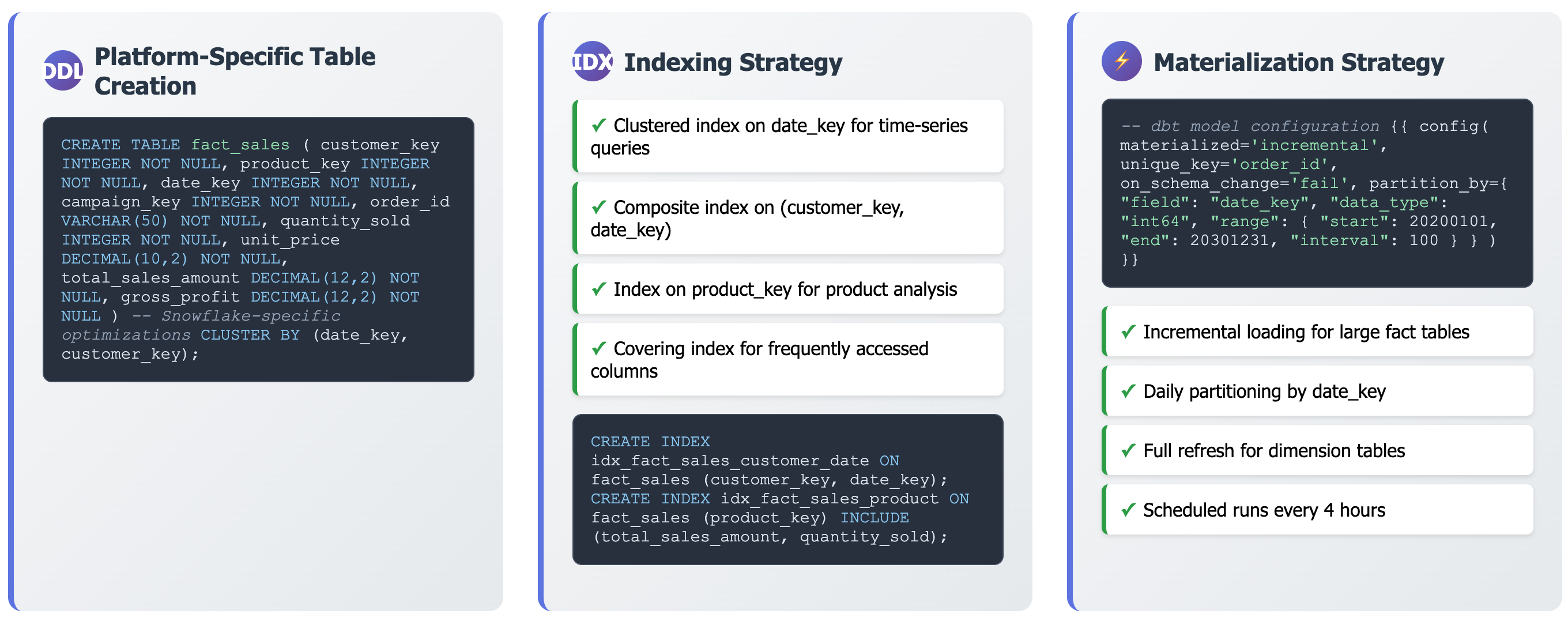

Goal: Implement the logical model with optimal performance, storage, and maintenance characteristics for production use.

This is where the science of data modelling takes centre stage. Now, it's time to focus on optimisation strategies, materialisation approaches, precise data types, and constraints for testing purposes. Technical decisions become critical: Should NULL values be allowed in specific fields? Should boolean fields use true/false or 0/1?
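The NULL and boolean questions above become concrete once they are expressed as constraints. The sketch below uses a hypothetical `dim_customer` table in SQLite, chosen only because it ships with Python's standard library. SQLite has no separate BOOLEAN storage class, which is exactly the kind of platform detail that drives the true/false versus 0/1 decision; your warehouse may behave differently.

```python
import sqlite3

# Physical design decisions expressed as constraints (hypothetical table).
DDL = """
CREATE TABLE dim_customer (
    customer_key  INTEGER PRIMARY KEY,
    customer_name TEXT NOT NULL,               -- decision: a name is always required
    region        TEXT,                        -- decision: NULL allowed for unknown region
    is_active     INTEGER NOT NULL
                  CHECK (is_active IN (0, 1))  -- decision: booleans stored as 0/1
)
"""


def build_connection():
    """Create an in-memory database containing the constrained table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(DDL)
    return conn


def try_insert(conn, row):
    """Insert one row; return None on success or the constraint error text."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO dim_customer (customer_key, customer_name, region, is_active) "
                "VALUES (?, ?, ?, ?)",
                row,
            )
        return None
    except sqlite3.IntegrityError as exc:
        return str(exc)


if __name__ == "__main__":
    conn = build_connection()
    for row in [(1, "Acme", None, 1), (2, None, "EMEA", 0), (3, "Globex", "APAC", 2)]:
        print(row, "->", try_insert(conn, row) or "inserted")
```

Constraints like these double as tests: a row that violates an agreed decision is rejected at load time instead of surfacing later in a report.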

At this stage, you dive into the technical details of data structure, format, storage specifications, table update mechanisms, and how downstream users will consume the data. There are extensive resources available for this aspect of data modelling, and you should leverage those that align with your specific data platform and implementation tools.

Step 1: Platform-Specific Design

Step 2: Implementation Architecture

Step 3: Data Quality Framework

Step 4: Performance Optimisation
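A data quality framework (Step 3) can start as a handful of reusable checks. The plain-Python sketch below is similar in spirit to the `not_null`, `unique`, `relationships`, and `accepted_values` generic tests that dbt provides: each check returns its failing rows, and an empty result means the check passes. The sample data and allowed status values are hypothetical.

```python
def not_null(rows, column):
    """Rows where the column is missing or NULL."""
    return [row for row in rows if row.get(column) is None]


def unique(rows, column):
    """Rows whose non-NULL value already appeared earlier (NULLs are left to not_null)."""
    seen, duplicates = set(), []
    for row in rows:
        value = row.get(column)
        if value is None:
            continue
        if value in seen:
            duplicates.append(row)
        seen.add(value)
    return duplicates


def relationships(rows, column, parent_rows, parent_column):
    """Rows whose non-NULL key has no matching parent (orphaned foreign keys)."""
    parent_keys = {parent.get(parent_column) for parent in parent_rows}
    return [row for row in rows if row.get(column) is not None and row.get(column) not in parent_keys]


def accepted_values(rows, column, allowed):
    """Rows whose value falls outside the agreed list."""
    return [row for row in rows if row.get(column) not in allowed]


# Hypothetical sample data with deliberate problems.
CUSTOMERS = [{"customer_key": 1}, {"customer_key": 2}]
ORDERS = [
    {"order_id": 10, "customer_key": 1, "status": "shipped"},
    {"order_id": 11, "customer_key": 3, "status": "shipped"},  # orphaned customer_key
    {"order_id": 11, "customer_key": 2, "status": "lost"},     # duplicate id, unexpected status
]


def run_suite():
    """Run each check and return the number of failing rows per check (0 = pass)."""
    checks = {
        "orders.order_id not_null": not_null(ORDERS, "order_id"),
        "orders.order_id unique": unique(ORDERS, "order_id"),
        "orders.customer_key relationships": relationships(ORDERS, "customer_key", CUSTOMERS, "customer_key"),
        "orders.status accepted_values": accepted_values(ORDERS, "status", {"placed", "shipped", "returned"}),
    }
    return {name: len(failures) for name, failures in checks.items()}


if __name__ == "__main__":
    for name, failures in run_suite().items():
        print(f"{'PASS' if failures == 0 else 'FAIL'} {name} ({failures} failing rows)")
```

Returning the failing rows rather than a bare true/false makes it easier to show stakeholders exactly which records break an agreed rule.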

PDM Deliverable: A production-ready data model with optimised performance, comprehensive testing, and clear documentation that supports both current needs and future growth.

Approaching data modelling with the structured methodology described in this framework means starting from a bird's-eye view of the "what", driven by business needs, rather than jumping directly into tools or technical details. This enables effective communication and engagement with business stakeholders, and it helps create data models that solve real-world problems and make a meaningful impact rather than sophisticated yet underutilised technical solutions.

Data modelling combines technical skills with systematic thinking. Mastering SQL and other tools is necessary, and adopting a proper data modelling methodology is important, but developing a consistent framework for approaching projects can be equally valuable.

The framework provides a structure for navigating complexity. Whether you're working with a simple analytics use case or a complex enterprise data model, having a systematic approach helps ensure that important considerations aren't overlooked.

Consider adapting this framework to your team's specific context and constraints. The goal isn't to follow it rigidly but to develop a consistent methodology that helps you build models that effectively solve real business problems.